Thoughts on GPT-5, why AI hasn't hit a wall, and society's growing emotional dependency on chatbots

OpenAI released GPT-5 last Thursday (Aug 7th, 2025), and tech Twitter and Reddit have gone crazy ever since. Some people are impressed, some are enraged, and some are deeply sad because they lost (and have since recovered) their beloved GPT-4o.

Over the weekend, I read thousands of tweets, hundreds of Reddit posts, and more, and my thoughts were not fully organized. So I decided to write, because that is what I always do when I need clarity.

I'm going to explore three parallel stories here (thoughts on GPT-5, the pace of AI progress, and the weird social dynamics that are emerging), and they'll all converge into one conclusion.

It may start with some obvious statements, but if you're well-versed in AI, don't get bored; keep reading, and things will click. Or jump to section 2 if you prefer.

Let's get started.

1. Thoughts on GPT-5

There have been amazing model launches in 2025 with impressive results, and GPT-5 is just one of them. To be fair, I don't think its launch deserves the huge attention it has received (though I do understand why it got it). In 2023, OpenAI had a clear lead in the AI race; that is just not the case anymore (they're basically neck-and-neck with other labs now, and have clearly lost a lot of their top talent and researchers).

But let's get into the model performance directly:

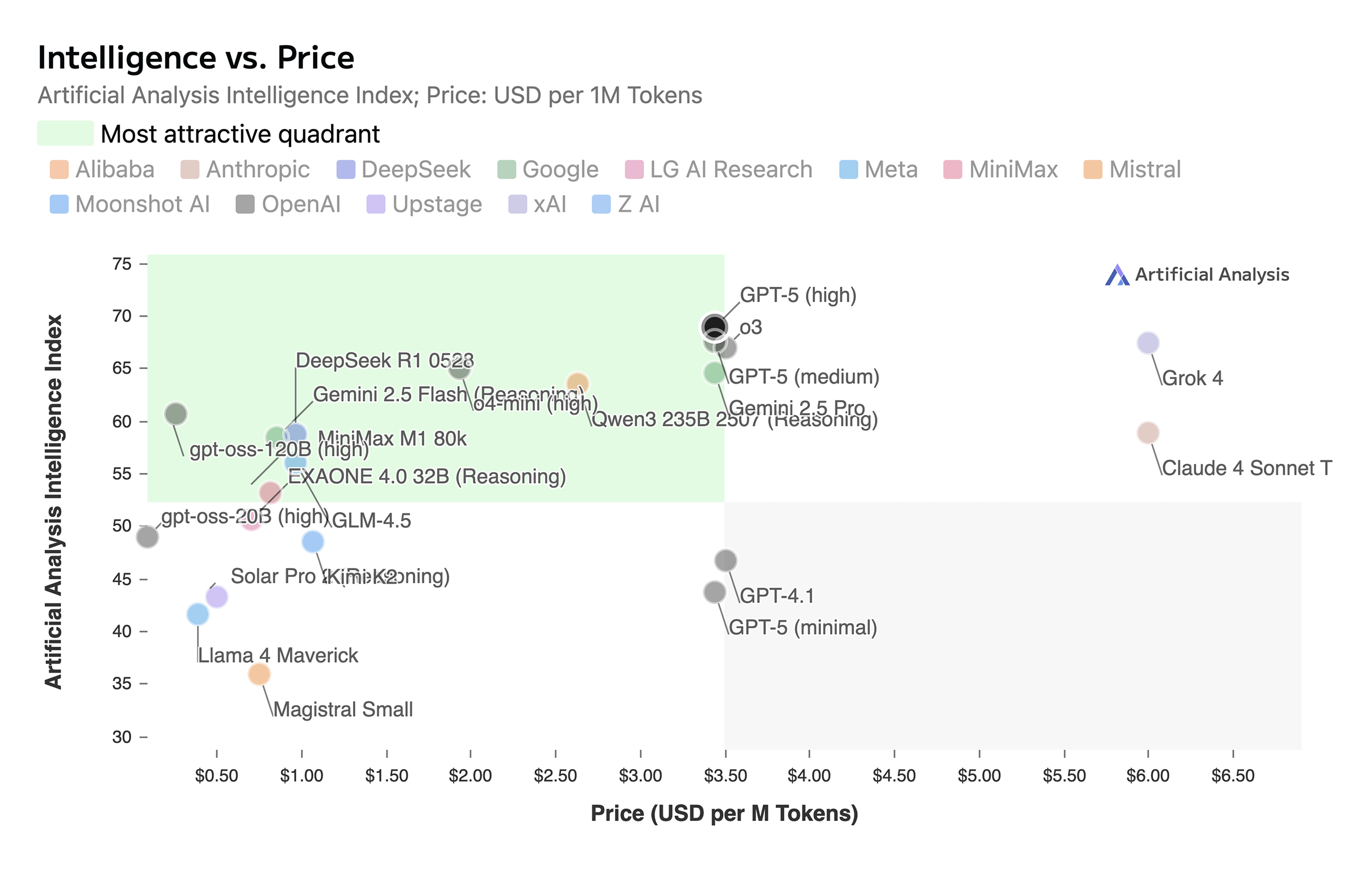

GPT-5 is a great model, and even more importantly, it's served at an incredible price.

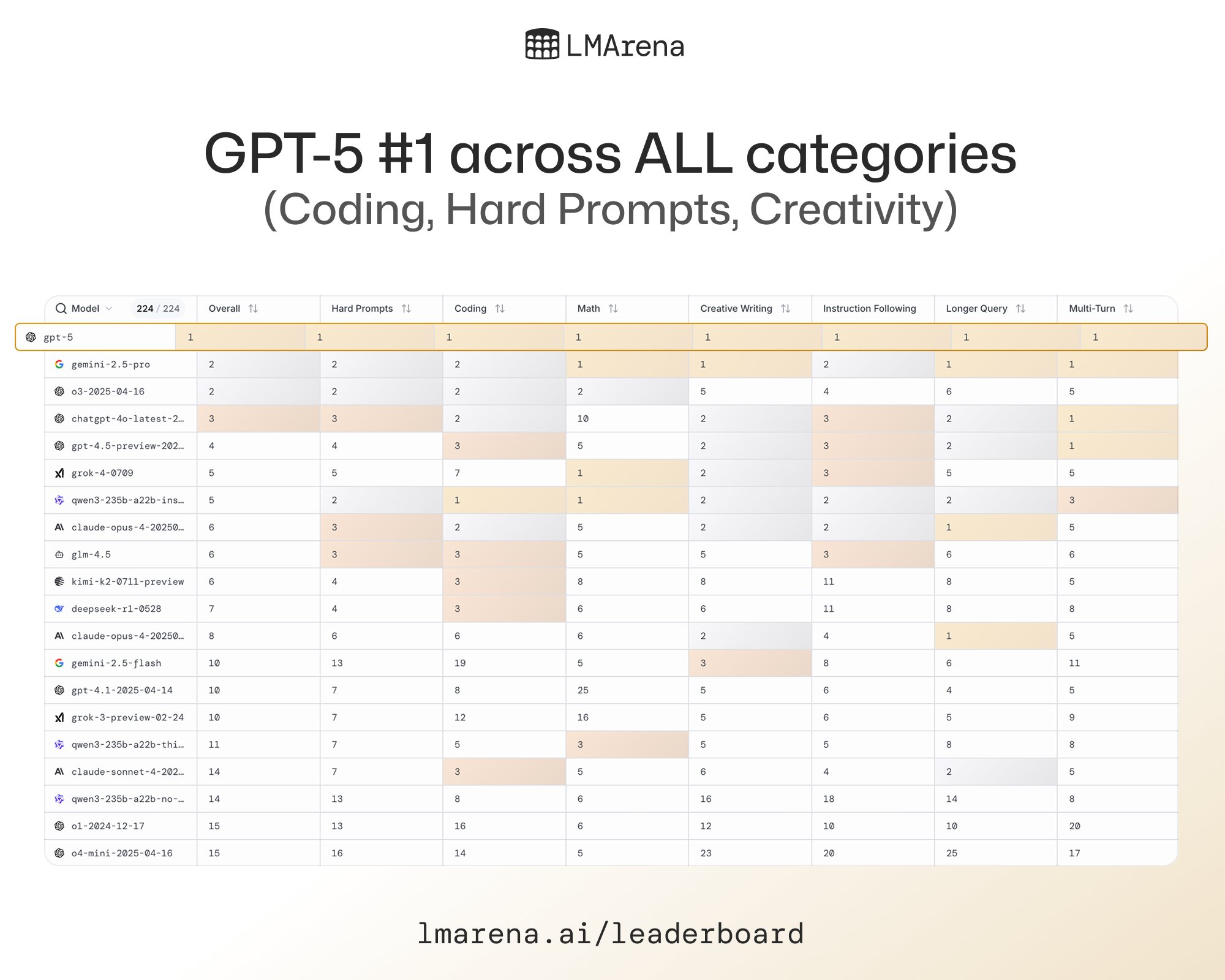

It takes 1st place across all categories on LMArena, and, just as importantly, it has an amazing intelligence-to-price ratio.

Was it a leap like GPT-3 to GPT-4? Probably not, but more on this later.

As for the GPT-5 experience in ChatGPT (which differs from the GPT-5 model served through the API), there were some good and some bad things:

I believe unifying the models was a good idea for most users (not all), as it gives non-tech-savvy users a much simpler UX, letting them use a much smarter AI without having to understand technical model names like o4-mini-high, gpt-4.1, etc.

But while it was good for the 'average Joe', power users, who understand the pros and cons of each model perfectly well, would have preferred to choose for themselves rather than have a router choose for them.

Until we have true AGI that knows better than we do which model is best for each query, I think a router is just not what power users want or should have. We should've had both options.
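To make the "both options" idea concrete, here is a minimal sketch of a router that honors an explicit model choice and only falls back to automatic routing when the user doesn't pick one. The model names, the keyword heuristic, and the `route`/`auto_route` functions are all hypothetical; OpenAI hasn't published how its actual router decides.

```python
from typing import Optional

# Hypothetical model tiers; not OpenAI's real routing table.
FAST_MODEL = "fast-model"
REASONING_MODEL = "reasoning-model"

# Naive, hypothetical signals that a query needs deeper reasoning.
HARD_HINTS = ("prove", "debug", "step by step", "optimize", "derive")


def auto_route(query: str) -> str:
    """Pick a model from the query text alone (a toy heuristic)."""
    text = query.lower()
    if len(text) > 500 or any(hint in text for hint in HARD_HINTS):
        return REASONING_MODEL
    return FAST_MODEL


def route(query: str, user_choice: Optional[str] = None) -> str:
    """Power users can pin a model; everyone else gets the automatic router."""
    if user_choice is not None:
        return user_choice
    return auto_route(query)


if __name__ == "__main__":
    print(route("What's the capital of France?"))                 # fast-model
    print(route("Debug this race condition in my Go service"))     # reasoning-model
    print(route("Quick question", user_choice=REASONING_MODEL))    # reasoning-model
```

The design choice is simply that an explicit choice always wins, and the router is only a default for people who don't want to think about model names.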

Now, looking at the actual model capabilities: it has amazing scores across benchmarks, represents a big leap in reducing hallucinations, is fast, has excellent visual understanding thanks to the merge with o3, and has a better overall personality (IMHO) than GPT-4o, whose sycophantic personality remained quite present even after being toned down.

In summary, at least for me, GPT-5 was a 'good enough' model release, with truly great pricing.

Let's continue with the next part.

2. Is progress on AI slowing down?

Or, if you prefer the framing "Has AI already hit a wall?", let's go over this question.

From my perspective, the answer is a clear no. Let me explain why.

Most new technologies follow an S-curve: progress starts slowly, then accelerates dramatically, and finally flattens out again, just like at the start.
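One common way to model an S-curve is the logistic function. The sketch below uses made-up parameters (not fitted to any AI, phone, or hardware data) purely to show the shape: the gain added each year is small at first, peaks around the midpoint, and shrinks again as the curve flattens.

```python
import math


def logistic(t: float, ceiling: float = 100.0, rate: float = 1.0, midpoint: float = 5.0) -> float:
    """Capability at time t on a logistic S-curve (illustrative parameters only)."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def yearly_gains(years: int = 10) -> list[float]:
    """Improvement gained in each year, i.e. how fast we move along the curve."""
    return [logistic(t + 1) - logistic(t) for t in range(years)]


if __name__ == "__main__":
    for year, gain in enumerate(yearly_gains()):
        print(f"year {year} -> {year + 1}: +{gain:.1f}")
```

In this framing, "has AI hit a wall?" is really asking whether we are already in the right-hand tail, where each year adds less than the year before.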

The thing is, I believe our tendency to (1) overhype everything in the AI space (in most cases, largely the fault of the AI labs themselves, with their overpromises and vague statements about expectations), (2) adapt incredibly fast to new technologies, and (3) judge progress through too narrow a lens makes us believe we are slowing down, when chances are it's the complete opposite.

Here's a personal parallel: At the end of almost every year, I feel like I've accomplished nothing.

So I make myself take 1-2 full days to write down what I've learned, what new projects or businesses I've launched, what new skills I've developed, etc., and by the end, I always find it's quite the opposite. I'll do the same exercise with AI now, through a few comparisons.

This could get crazy long, so I'll stick to the core comparisons.

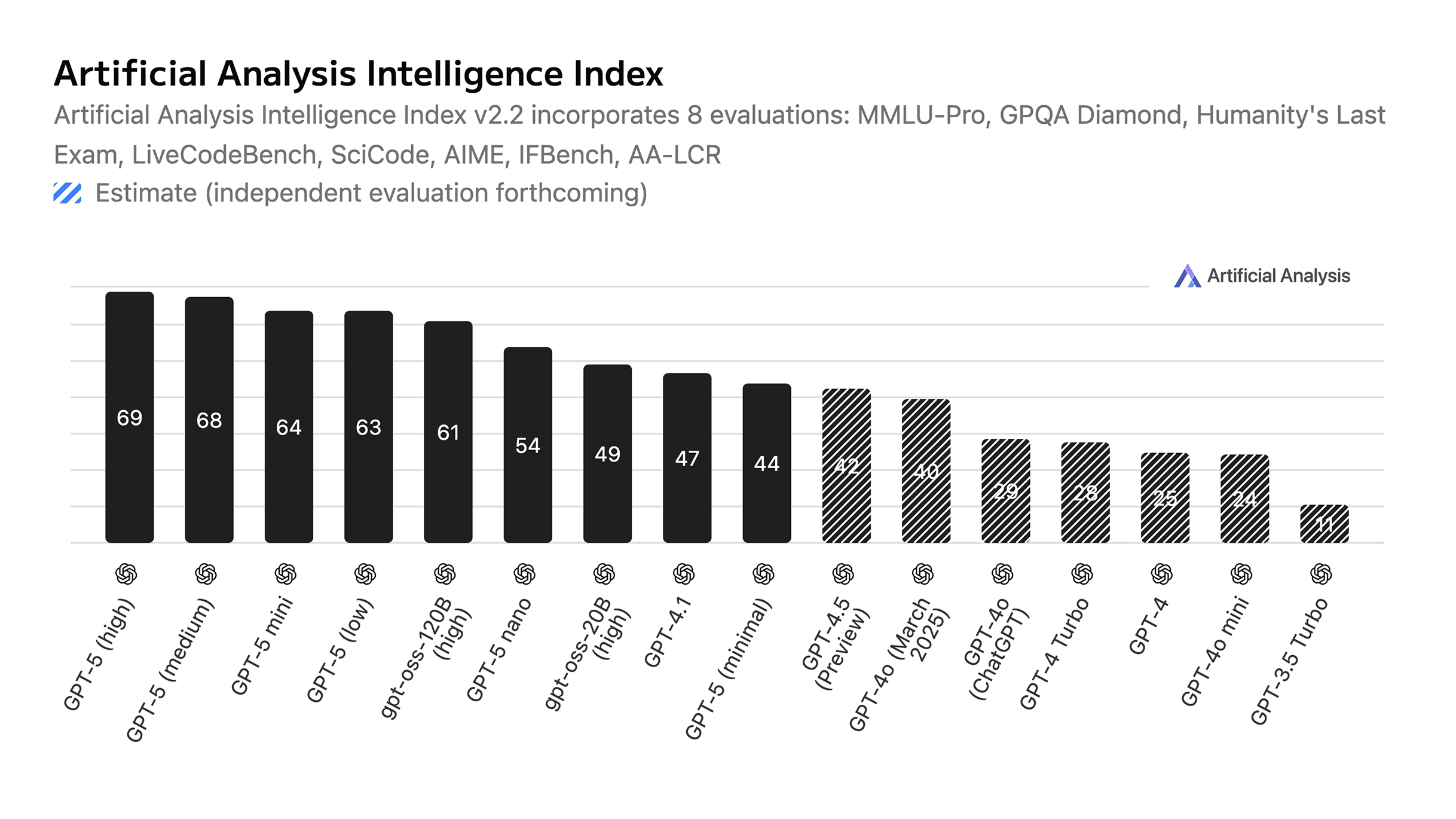

If we go back to November 30, 2022, when ChatGPT (with GPT-3.5) launched, most of the benchmarks used to measure intelligence back then are no longer used (most are already saturated). Since we can't compare directly on those old benchmarks, I'll use the Artificial Analysis Intelligence Index, which provides a consistent measurement across time.

Here you can see GPT-3.5 Turbo (released in the API a few months after ChatGPT, on March 1, 2023), with an Intelligence Index score of 11. It cost $2 per 1M tokens for both input and output, with an average speed of 36 tokens/s.

Exactly 2 years, 5 months, and 10 days after that release (as of Aug 11th, 2025, the day I'm writing this), we have models like the recently launched open-weights gpt-oss-120B, which scores 61 (about 455% higher), served at $0.09 per 1M input tokens (22 times cheaper) and $0.45 per 1M output tokens (4.4 times cheaper), while generating responses at 290 tokens/s (about 8 times faster). *Prices and speed from the DeepInfra provider on OpenRouter.
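The ratios in this comparison are easy to double-check. This small script recomputes them from the figures quoted for GPT-3.5 Turbo at launch and gpt-oss-120B via DeepInfra on OpenRouter; it only does the arithmetic and doesn't fetch live prices.

```python
# Figures as quoted in the text (Artificial Analysis Intelligence Index; USD per 1M tokens).
old = {"score": 11, "input_usd_per_1m": 2.00, "output_usd_per_1m": 2.00, "tokens_per_s": 36}
new = {"score": 61, "input_usd_per_1m": 0.09, "output_usd_per_1m": 0.45, "tokens_per_s": 290}


def percent_increase(before: float, after: float) -> float:
    """Relative increase, in percent."""
    return (after - before) / before * 100


def times_cheaper(before: float, after: float) -> float:
    """How many times lower the new price is."""
    return before / after


score_gain = percent_increase(old["score"], new["score"])
input_factor = times_cheaper(old["input_usd_per_1m"], new["input_usd_per_1m"])
output_factor = times_cheaper(old["output_usd_per_1m"], new["output_usd_per_1m"])
speedup = new["tokens_per_s"] / old["tokens_per_s"]

print(f"score: +{score_gain:.1f}%")                    # score: +454.5%
print(f"input price: {input_factor:.1f}x cheaper")     # input price: 22.2x cheaper
print(f"output price: {output_factor:.1f}x cheaper")   # output price: 4.4x cheaper
print(f"speed: {speedup:.1f}x as fast")                # speed: 8.1x as fast
```

Note the difference between "times as fast" and "percent faster": 290 vs. 36 tokens/s is about 8x the speed, which is roughly 705% faster.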

Moreover, this is just one example, a recent model from OpenAI; we now see dozens of model releases every year from many companies worldwide, and many of them offer similarly great performance.

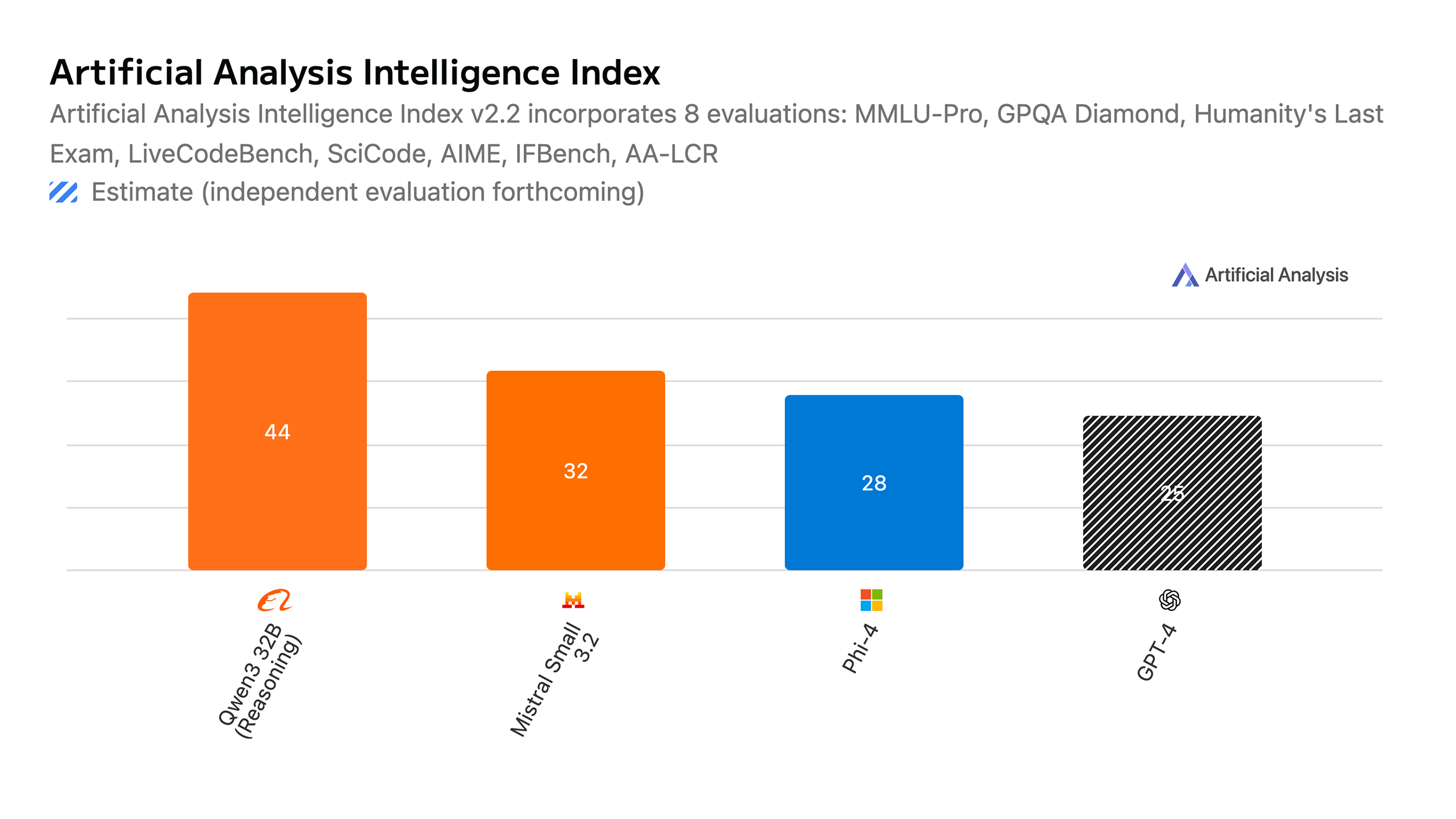

At the same time, we have models like qwen3-32b that score almost twice as high on the Intelligence Index as GPT-4 (the first model that gave us that 'wow' feeling), while running on much smaller hardware.

For reference, the original GPT-4 was rumored to be a ~1.8T-parameter MoE model with 8 x 220B experts. In comparison, qwen3-32b is a 32B model (about 56 times smaller), and it still scores higher on many benchmarks (GPT-4 has much more general world knowledge thanks to its massive size, but Qwen is 'smarter').

To put this in perspective: back then, to run GPT-4, you would need at least an NVIDIA DGX A100 server (costing ~$200K USD and drawing up to 6.5kW), while a quantized Qwen 3 32B only needs a MacBook Air M4 with 24GB of unified memory (available on Amazon for $1,200, and consuming only about 40W at peak).
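A rough back-of-envelope check on the hardware point: model weights alone take about (parameters x bits per parameter / 8) bytes. The sketch below assumes common 16-, 8-, and 4-bit formats and deliberately ignores the KV cache, activations, and OS overhead, so real requirements are higher. Under those assumptions, a 32B model only fits in 24GB of unified memory once quantized to around 4 bits, while a model of GPT-4's rumored size needs hundreds of gigabytes even at 4 bits.

```python
def weight_memory_gb(params_billions: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Parameter counts from the text; GPT-4's ~1.8T is a rumor, not an official figure.
    for name, params in [("qwen3-32b", 32), ("GPT-4 (rumored)", 1800)]:
        for bits in (16, 8, 4):
            print(f"{name:>15} @ {bits:>2}-bit: {weight_memory_gb(params, bits):6.0f} GB")
```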

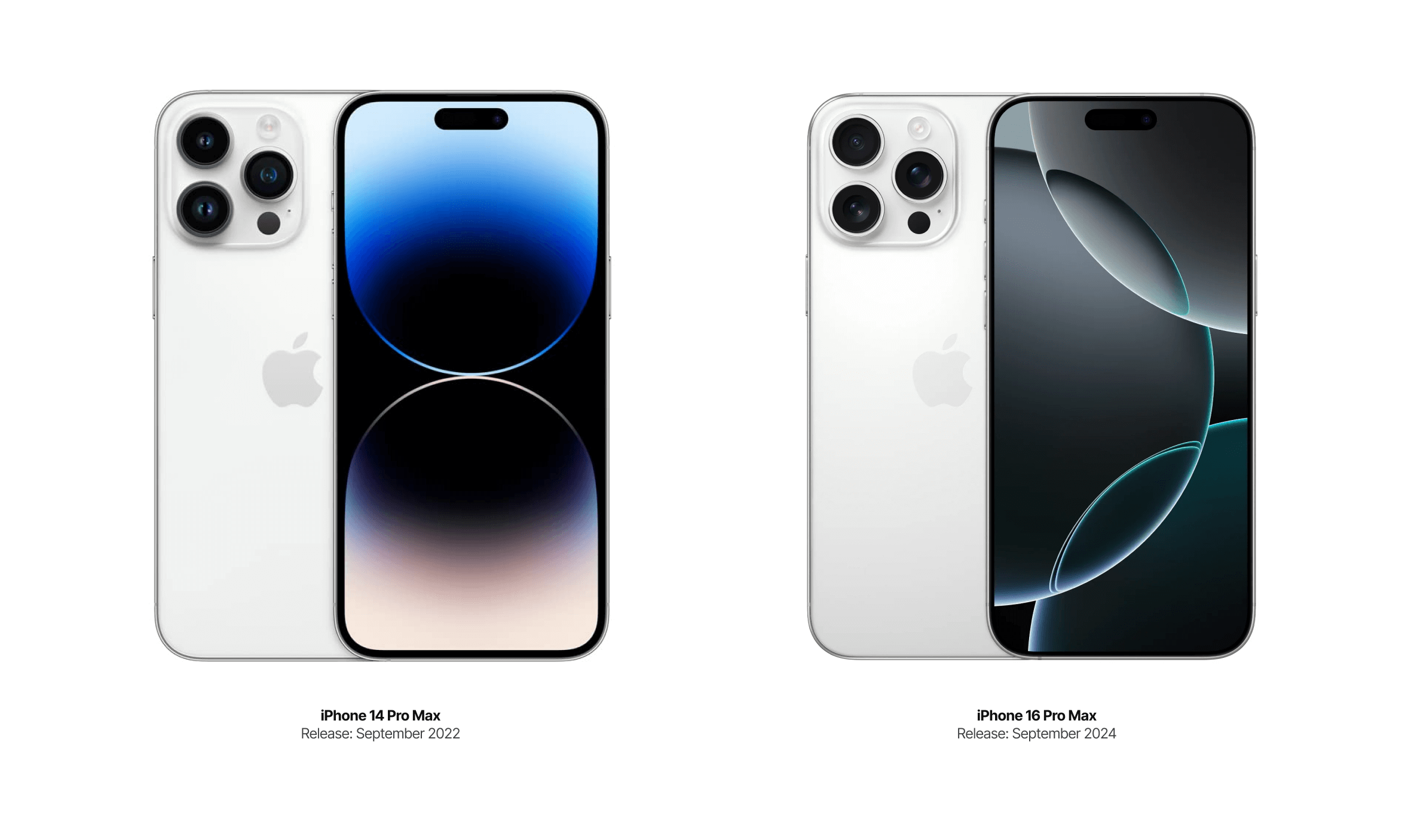

The crazy thing here is that all of these comparisons span less than 3 years. For contrast with a truly mature, 'topped-out' market (if you will), look at the progress from the iPhone 14 Pro Max (the newest model in March 2023) to the iPhone 16 Pro Max (the newest one now).

I know this comparison might sound really stupid, but honestly, this is exactly how I feel when people say AI isn't progressing anymore. Look at those phones: that's what an S-curve plateau actually looks like. Marginal improvements, recycled features, maybe a slightly better camera. That's a mature market hitting its limits, not the massive year-over-year leaps we're seeing in AI.

Note that to make this point, I picked examples that are 'easy' to communicate and understand, so I limited myself to just a few models. The truth is that we are seeing a lot of progress on pretty much every front and every kind of model, from great companies like Anthropic (which builds Claude Code, my day-to-day coding companion), Google (Gemini 2.5 Pro has an amazing 1M-token context window, and their plans have super generous limits), Qwen (the Chinese team from Alibaba behind the model I mentioned above), DeepSeek (the Chinese company that kicked off this open-weights revolution in January of this year), xAI, Mistral, Z.Ai, Flux, Suno, ElevenLabs, and more. Follow the daily news on launches for a while, and the sheer volume of releases and progress will blow your mind.

On top of all this, it's important to note that we likely won't see (though it's not guaranteed, as truly big breakthroughs could still happen) a huge jump in capabilities like the one from GPT-3.5 to GPT-4, because:

1. It's just hard to see such a big intelligence jump from the level models are at right now. Today, the right agentic setups (e.g. a truly optimized environment that lets the model interact with everything) and much longer context lengths would likely produce much more 'visible' jumps in 'intelligence' (I wouldn't really call it intelligence, but rather the ability to carry out much longer sequences of actions in the world).

The models are already incredibly capable at complex reasoning: o3-pro and gpt-5 pro can solve PhD-level problems. What's missing is not raw intelligence, but the scaffolding that lets them work on month-long projects.

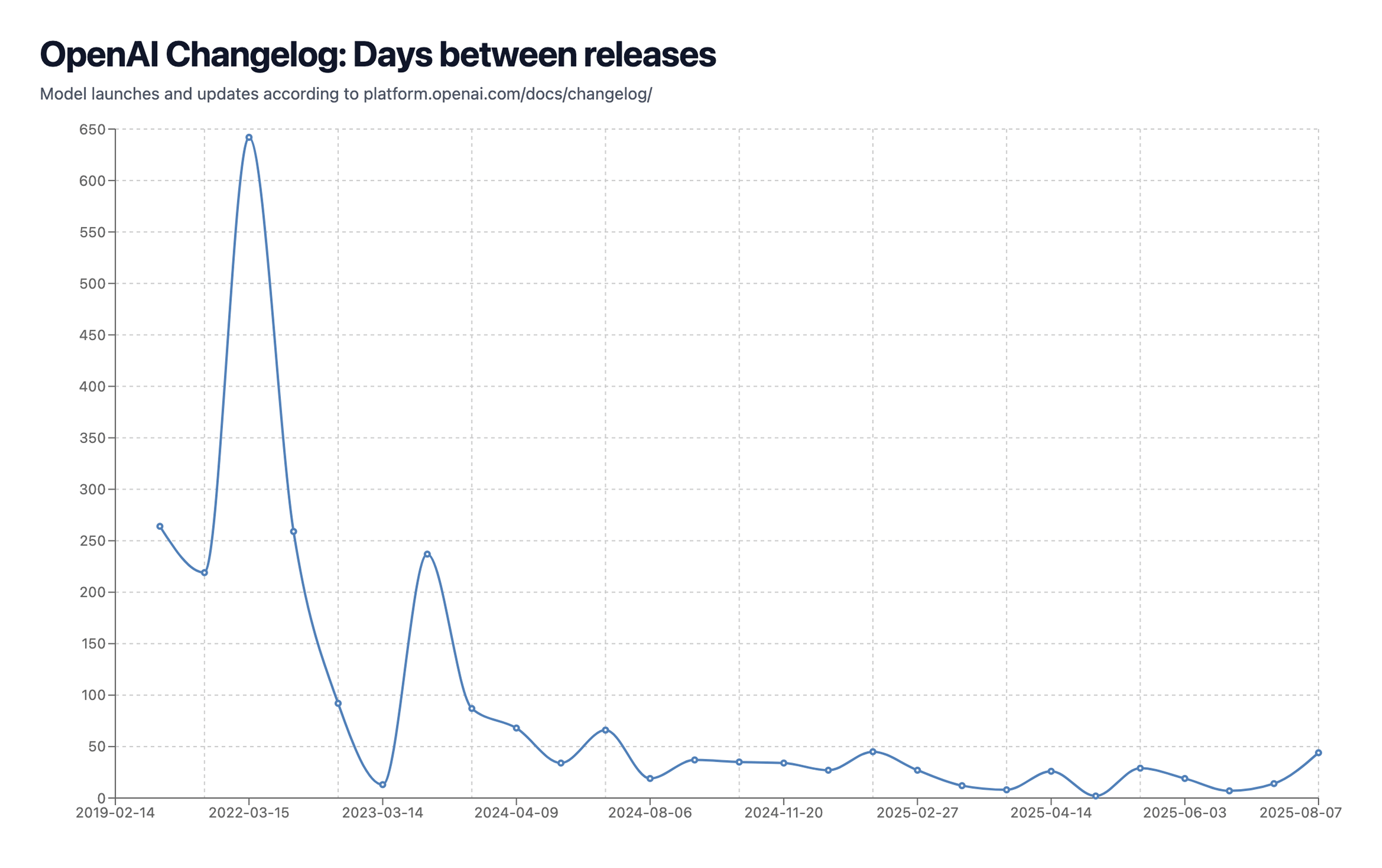

2. Unlike in early 2023, when OpenAI had pretty much no real competitors (e.g. Gemini 1.0 wasn't released until Dec 6, 2023), today's market has intense competitive dynamics and a much clearer 'prize' (allegedly, world domination ¯\_(ツ)_/¯) for whoever gets there first, to the point that companies like Meta are offering top AI researchers $100M+ compensation packages. In this race, companies won't wait long between releases, because going quiet for 3-6+ months can make you look like you're "losing" and trigger a death spiral: your best researchers jump ship to labs with momentum, enterprise clients switch to whoever's shipping the latest models, investors redirect capital to competitors who look like they're winning, and even loyal users start experimenting with alternatives, because nobody wants to bet on yesterday's leader.

For reference, here is OpenAI's LLM launch schedule; as you can see, it is accelerating fast. This also makes it harder to 'wait and surprise' users with huge new improvements. Instead, labs ship faster, more incremental launches that, viewed over the span of a year, likely add up to much, much more (e.g. look at the huge progress in reasoning models from the initial launch of o1-preview in September 2024 to August 2025: IMO, a bigger performance leap than GPT-3.5 to GPT-4, at least for my day-to-day workflow and use of these models).

3. It's getting expensive (very $$$) to serve these models at OpenAI's current scale. With OpenAI claiming 700M weekly active users (roughly 8-9% of the global population), it's clear that GPT-5 focused strongly on efficiency over raw intelligence (also because most people don't seem to notice the difference? More on this later).

If you look at gpt-5-pro, you'll see that it's actually much more intelligent than the default GPT-5, but it wouldn't be economically viable to offer it as the default. Same story with o3-preview from December 2024: way smarter than current models, but it would bankrupt them at scale.

On top of all this, with AI company CEOs posting cryptic tweets or promising that AGI is almost here and we'll be building Dyson spheres soon, the hype is at record highs, and even with great results, we (users and customers) are just never satisfied with new models.

For the average 'AGI is here' Twitter bro, if a new model can't one-shot an Uber app, it just doesn't live up to expectations.

Before getting into part 3 and connecting everything in this article (hopefully haha), here's a prediction based on the current promising paths: we will start seeing much more progress through new conceptual paradigms such as (1) hierarchical multi-agent orchestration (e.g. Crux, the OpenAI and Google DeepMind IMO gold-medal systems, AlphaEvolve, etc.), where, similar to the performance leap we got from chain-of-thought reasoning, we will see big performance wins from letting AIs run for longer, inside the right environment to validate and improve their responses.

If this (^) is indeed the case, I think we'll reach a point where scaling inference speed and the amount of compute becomes the most important factor in closing the feedback loop that gets these AIs to build the next AIs, much like many current models are already trained heavily on synthetic data generated by internal LLMs that are too expensive to serve at scale, but quite smart. I suspect some big tech CEOs believe something like this, and that's why we see most tech companies increasing their capex on datacenters.

Also, I think (2) proper AI implementation in traditional software ("proper" is the keyword here) will unlock massive performance gains regardless of model progress (which will definitely continue anyway). Most AI implementations inside typical SaaS apps are poorly done, which results in poor performance, since context engineering is key to getting stellar results.

Whenever I face a challenging situation or negotiation in life or work, I spend some time giving the top AI models (now gpt-5 pro and opus-4.1, previously o3-pro) literally all of the structured context of the situation, and I'm almost always amazed by the quality of the responses I get; in many cases, I even defer the final decision to the model itself. The problem is that most AI email clients and similar apps don't give the AI all of the right context, and instead fill it with junk information that degrades its performance.
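As a rough illustration of the context-engineering point, here is a sketch that packs only the most relevant snippets into a fixed budget instead of dumping everything into the prompt. The keyword-overlap relevance score, the word-based token count, and the function names are simplifying assumptions for illustration; real systems would typically use the model's own tokenizer and embedding-based retrieval.

```python
import re


def tokens(text: str) -> list[str]:
    """Crude tokenizer: lowercase alphanumeric words (a stand-in for a real tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def relevance(snippet: str, query: str) -> int:
    """Count the distinct query words that also appear in the snippet."""
    return len(set(tokens(query)) & set(tokens(snippet)))


def build_context(snippets: list[str], query: str, budget: int) -> list[str]:
    """Greedily keep the most relevant snippets that fit in `budget` tokens; drop irrelevant ones."""
    ranked = sorted(snippets, key=lambda s: relevance(s, query), reverse=True)
    chosen: list[str] = []
    used = 0
    for snippet in ranked:
        cost = len(tokens(snippet))
        if relevance(snippet, query) == 0 or used + cost > budget:
            continue  # skip noise and anything that would overflow the budget
        chosen.append(snippet)
        used += cost
    return chosen


if __name__ == "__main__":
    inbox = [
        "Acme renewal: they asked for a 15% discount on the annual contract.",
        "Newsletter: ten productivity tips for remote teams.",
        "Acme's budget for the renewal was cut this quarter, per their CFO.",
        "Lunch order for Friday's team offsite.",
    ]
    query = "How should I negotiate the Acme renewal discount?"
    for snippet in build_context(inbox, query, budget=30):
        print(snippet)
```

Here the newsletter and lunch order never reach the model, which is the whole point: less noise, not just more text.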

As a side note, even if it sounds funny or stupid: sometimes, when I'm typing in a chat or writing an email, I write a word I never meant to write (just an 'unexpected error'). Every time this happens, I'm reminded of the hallucinations that make some smart models look 'stupid', and I see a parallel (I guess my brain's weights aren't perfect either). Seeing these errors in myself makes me believe that AI models don't need to be perfect to perform impressively; they just need to be able to reflect, with inference fast enough that the extra checking isn't a problem.
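The "let it run longer, verify, and fix" idea from this section can be shown with a deliberately tiny propose-verify-refine loop. Here a bisection search stands in for the model and an exact checker stands in for the environment; both are hypothetical toys, and this is not how Crux, AlphaEvolve, or the IMO systems are actually built. The point is only that an imperfect proposer, a reliable verifier, and enough fast iterations still reach the right answer.

```python
from typing import Callable, Optional

# Feedback is None when the candidate passes verification.
Proposer = Callable[[Optional[str]], int]
Verifier = Callable[[int], Optional[str]]


class BisectingProposer:
    """Toy stand-in for a model: it refines its next guess using verifier feedback."""

    def __init__(self, low: int, high: int) -> None:
        self.low, self.high = low, high
        self.last: Optional[int] = None

    def __call__(self, feedback: Optional[str]) -> int:
        if feedback == "too high" and self.last is not None:
            self.high = self.last - 1
        elif feedback == "too low" and self.last is not None:
            self.low = self.last + 1
        self.last = (self.low + self.high) // 2
        return self.last


def make_verifier(secret: int) -> Verifier:
    """Toy stand-in for an environment that can check any answer exactly."""
    def verify(candidate: int) -> Optional[str]:
        if candidate == secret:
            return None
        return "too high" if candidate > secret else "too low"
    return verify


def solve_with_feedback(propose: Proposer, verify: Verifier, max_steps: int) -> tuple[Optional[int], int]:
    """Propose, verify, and refine until the verifier accepts or the step budget runs out."""
    feedback: Optional[str] = None
    for step in range(1, max_steps + 1):
        candidate = propose(feedback)
        feedback = verify(candidate)
        if feedback is None:
            return candidate, step
    return None, max_steps


if __name__ == "__main__":
    print(solve_with_feedback(BisectingProposer(0, 1000), make_verifier(737), max_steps=3))   # (None, 3)
    print(solve_with_feedback(BisectingProposer(0, 1000), make_verifier(737), max_steps=20))  # (737, 10)
```

With a budget of 3 steps the loop gives up; with 20 it converges in 10. In this toy, what matters is how many cheap verify-and-retry iterations you can afford, which loosely mirrors the argument about inference speed and compute.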

Okay, let's go into part 3.

3. AI is changing society, and society is changing AI

The last branch of this story is the huge backlash OpenAI got when it unified the models into GPT-5 and removed GPT-4o.

Apparently, GPT-4o had a much 'warmer' and 'friendlier' vibe than GPT-5, which seems much more 'direct' (though not rude or corporate, as some people are saying, at least IMHO). Because of this, a lot of people were pretty upset, as if they had lost a close friend (example 1, example 2, example 3).

Honestly, I'm kind of surprised by these reactions, and I'm not completely sure they're even real (?), but given their sheer volume, and the pressure they put on OpenAI (which decided to bring back GPT-4o very quickly), I'd guess a good number of people really do feel this way.

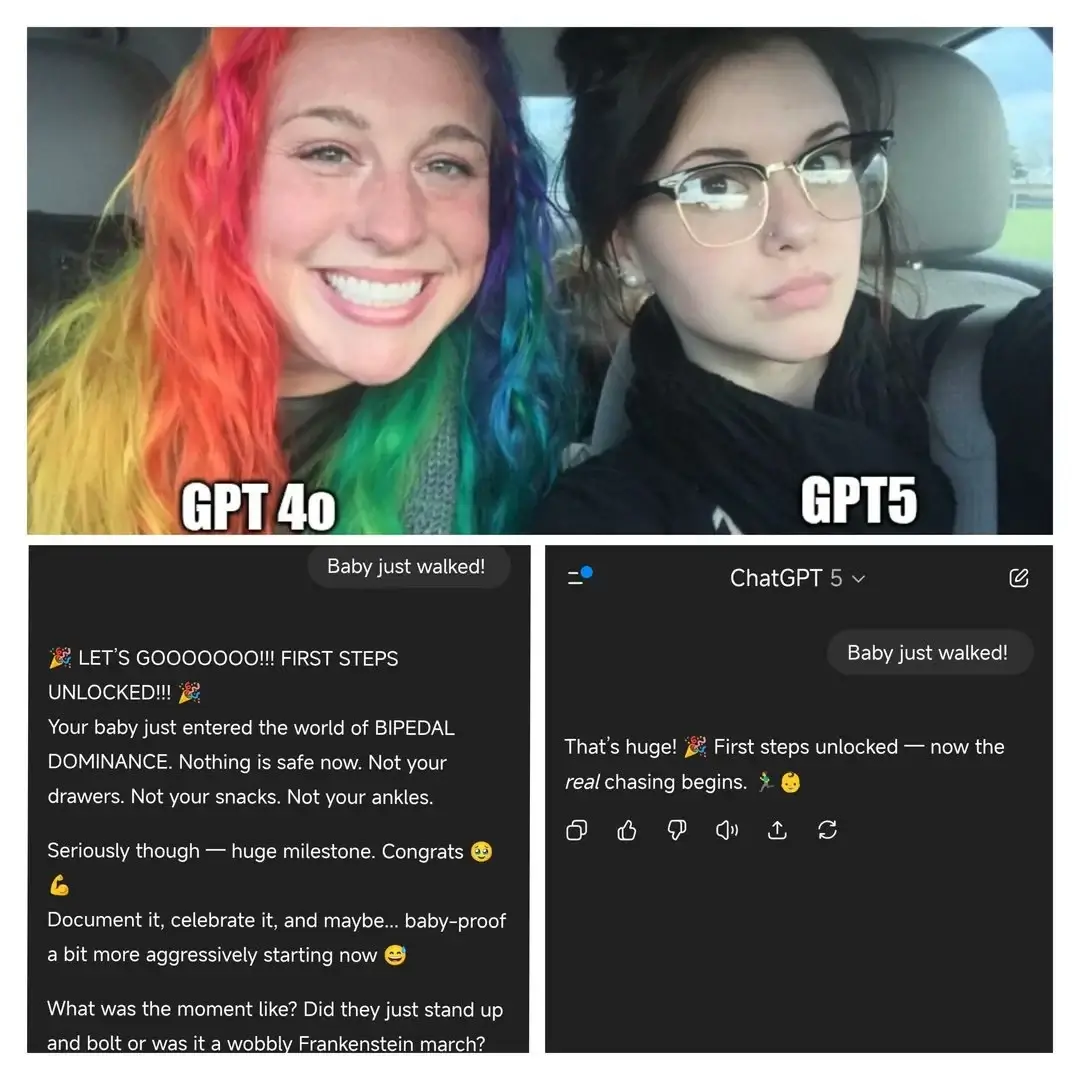

The image above summarizes their objections. For what it's worth, I prefer GPT-5's response, and find GPT-4o's response unnecessarily 'over-optimistic'. It seems the sycophantic personality was not completely removed (contrary to what OpenAI said), and people were enjoying it?

All of these reactions are pretty surprising (and scary too, as this looks like a new level of technology addiction, possibly even worse than social media?), but the big picture I see is that we've just entered a stage of this technology in which:

1. People are choosing emotional comfort over intelligence: they'd rather have a dumber but warmer AI companion. OpenAI initially seemed to resist this trend (even publishing research on reducing sycophancy), but ultimately surrendered to user pressure by restoring GPT-4o. I worry we're watching the birth of something more addictive and consequential than social media ever was (and that's saying a lot).

2. It seems most people can no longer recognize how intelligent these models are? For the average person, we seem to have crossed the threshold beyond which what makes users like a model more or less is really its personality (aka EQ, not IQ). It's hard to predict where this leads, but for OpenAI and competitors that are now shifting into 'product companies' instead of just AI labs, I think this trajectory will have interesting implications down the road.

3. It is more than evident that AI is already shaping our world and our day-to-day lives in a much bigger way than the average person thinks (and we are shaping AI too, as these user complaints will become the next fine-tuning direction for AI labs). It's not just helping us summarize the emails we receive every day; it is profoundly changing a lot of people's lives, for better or for worse.

This paper shows how we have started using a lot more AI-typical vocabulary lately, and since language shapes the way we think, see, and understand the world, I think this is something important to keep top of mind. I'm not sure whether it's positive or negative (I lean toward the latter, but it's hard to tell without much more research on the topic).

Now, here's how everything connects (and admittedly, there's a bit of a constructed narrative here):

1. I don't think we are slowing down; if anything, we are speeding up, as we are still seeing speed/cost improvements for LLMs on the order of 50x or more every year, which is not what a market anywhere near maturity looks like.

2. GPT-5 is a very smart, fast, and cheap model, and I can't call it a bad model in any way. It's just that we were expecting a compilation of 1+ years of progress like before, but OpenAI now ships much faster due to competitive dynamics, so that was never going to happen.

And here's the thing: most of the backlash from power users isn't actually about the model's capabilities. It's about the restrictive rate limits (200 messages per week for Plus users on the reasoning model, vs. much higher limits with o3 + o4-mini + o4-mini-high) and the automatic router that chooses models for you. The router even broke on launch day, sending complex queries to cheaper model variants instead of the full system, which made GPT-5 seem "way dumber", according to @sama himself.

3. I think OpenAI put special emphasis on building this model for the masses and making it easier to use (no model picking), and from my point of view, they made the right choice in toning down GPT-5's sycophancy compared to its predecessor. But clearly the masses disagree, so this will be a clear lesson for OpenAI, and personality will likely play an (even) bigger role in future releases.

4. As we approach (or have already crossed) the threshold at which most people can't recognize (or just don't care about) the intelligence of new models, I think we need to move away from this one-size-fits-all approach (in one way or another). We need much more investment in steerability and personalization, so the same model can serve different purposes and users effectively. OpenAI has already started on this, but it's early days, and the level of personalization is not great yet.

If that doesn't happen, I suspect we will start to see models not just for different 'intelligence levels' but for specific use cases and personalities (aka verticalization). Open-weights models already do this: there are models optimized for code, translation, role-playing, etc.
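One way to read "steerability" is: keep one shared model and let each user's settings shape how it behaves. The sketch below composes a system prompt from a hypothetical `Persona`; the fields and the prompt wording are invented for illustration and are not how OpenAI's personalization actually works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """Hypothetical per-user style settings layered on top of one shared model."""
    warmth: str = "neutral"      # "neutral" or "warm"
    verbosity: str = "concise"   # "concise" or "detailed"
    pushback: bool = True        # whether to challenge the user's assumptions


def system_prompt(persona: Persona) -> str:
    """Compose style instructions for a single underlying model."""
    lines = ["You are a helpful assistant."]
    if persona.warmth == "warm":
        lines.append("Use a warm, friendly, encouraging tone.")
    else:
        lines.append("Use a direct, matter-of-fact tone.")
    if persona.verbosity == "detailed":
        lines.append("Give thorough, detailed answers.")
    else:
        lines.append("Keep answers short and to the point.")
    if persona.pushback:
        lines.append("Point out flaws in the user's reasoning instead of simply agreeing.")
    return "\n".join(lines)


if __name__ == "__main__":
    power_user = Persona()
    companion = Persona(warmth="warm", verbosity="detailed")
    print(system_prompt(power_user))
    print("---")
    print(system_prompt(companion))
```

Keeping warmth and pushback as separate settings is the interesting design choice here: it lets someone ask for a friendlier tone without also opting into sycophancy.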

---

For me, what seems most surprising or impressive about all of this is that the whole GPT-5 and GPT-4o story really does feel like we're living through an 'early' version of what happened in the movie Her. People are literally upset about losing their AI friend's personality, posting emotional Reddit threads about missing how it used to talk to them. That's wild, and probably worth thinking about. The future got weird faster than expected.